Illustrative Style Transfer with Deep Learning

- Paul Carter, PhD

- May 21, 2021

- 4 min read

Generative Adversarial Networks (GANs) provide a deep learning framework for generating completely new images, now at a quality that in many cases makes them indistinguishable from real images. Advances in this field have also led to a move towards computer-generated artistic styles. One aspect of generative deep learning that I was interested in exploring was the ability to take the artistic style from one image and transfer it onto another. My partner Ashlee is a professional digital illustrator (https://www.ashleespink.com), with one of her focuses being children's picture books, so I wanted to see whether I could use a pre-trained convolutional neural network (CNN) to transfer her illustrative style onto other images.

Neural Style Transfer

The idea behind Neural Style Transfer is to minimise a loss function which is a weighted sum of a content loss and a style loss. The content loss is used because we want the generated image to depict the same subject as the content image, while the style loss captures the general style of the style image. As will be seen below, the two loss functions are built from different parts of the pre-trained CNN. Deeper layers of such networks encode richer, higher-level features, so a deep layer is a sensible place to evaluate the content loss. In comparison, the style loss requires information about the correlations between feature channels, compared between the generated image and the style image.

Application of Style Transfer on Ashlee's Digital Illustration
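As a compact summary of the weighted objective described above, the quantity being minimised can be sketched as follows. The symbols are illustrative notation rather than names taken from the code: $x$ is the generated image, $c$ the content image, $s$ the style image, and $\alpha$ and $\beta$ stand for the content and style weights.

$$
\mathcal{L}_{\text{total}}(x) = \alpha \, \mathcal{L}_{\text{content}}(x, c) + \beta \, \mathcal{L}_{\text{style}}(x, s)
$$

Raising $\beta$ relative to $\alpha$ pushes the result towards the textures of the style image at the expense of the structure of the content image, and vice versa.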

The Neural Style Transfer was implemented with the TensorFlow framework. For the initial application we use a content image of a Green Sea Turtle and, as the style image, Ashlee's children's-book-style illustration of a Giraffe.

As mentioned above, the process makes use of a pre-trained CNN, here the VGG19 model pre-trained on ImageNet. We can load this pre-trained model from Keras.

    import tensorflow as tf
    from tensorflow.keras import models

    def get_model():
        # Load VGG19 without its dense classification head, with ImageNet weights.
        vgg = tf.keras.applications.vgg19.VGG19(include_top=False, weights='imagenet')
        # The network is only a fixed feature extractor; its weights are never updated.
        vgg.trainable = False
        # Intermediate activations used by the style and content losses.
        style_outputs = [vgg.get_layer(name).output for name in style_layers]
        content_outputs = [vgg.get_layer(name).output for name in content_layers]
        model_outputs = style_outputs + content_outputs
        return models.Model(vgg.input, model_outputs)

In the above we build the model outputs from the layers targeted by the style transfer loss function, whose names are stored in style_layers and content_layers. Setting include_top=False means that we do not load the final dense layers, which would otherwise be used to classify the images. For the content loss, the key is to favour images which share the same macro properties, with less importance given to pixel-by-pixel comparisons. The deeper layers of the pre-trained CNN have weights which capture high-level features. For this model we use the second convolutional layer from the fifth block of the network's architecture.

    content_layers = ['block5_conv2']

The content loss is then the mean of the squared difference between the target and base content image outputs from the chosen layer.

    def get_content_loss(base_content, target):
        return tf.reduce_mean(tf.square(base_content - target))

For the style loss, comparing the correlations between feature maps within a given layer of the network provides a good solution. This is done by building a Gram matrix of the dot products between all possible pairs of feature channels in the layer. We calculate a Gram matrix at a set of layers throughout the network for both the base style image and the generated image, and compare their similarity. This is done whilst normalising out the variation in layer size and number of channels between the layers aggregated over. In this model the aggregation is done over the first convolutional layer from each of the five blocks.
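To make the Gram matrix more concrete, here is a minimal NumPy sketch on a hand-made feature map. It is an illustration only: the NumPy function is a stand-in written for this example rather than the TensorFlow code used in the model, and the 2x2 feature map with two channels is invented. Each entry is $G_{ij} = \frac{1}{n} \sum_{p} F_{pi} F_{pj}$, where $p$ runs over the $n$ spatial positions and $i$, $j$ index channels.

```python
import numpy as np


def gram_matrix(features):
    """Gram matrix of a (height, width, channels) feature map, averaged over positions."""
    channels = features.shape[-1]
    a = features.reshape(-1, channels)  # one row per spatial position
    n = a.shape[0]                      # number of spatial positions
    return a.T @ a / n                  # shape: (channels, channels)


# Illustrative 2x2 feature map with 2 channels (values chosen by hand).
features = np.array([[[1.0, 0.0], [0.0, 1.0]],
                     [[1.0, 1.0], [2.0, 0.0]]])

gram = gram_matrix(features)
print(gram)  # equals [[1.5, 0.25], [0.25, 0.5]]
```

The diagonal entries measure how strongly each channel fires on average, and the off-diagonal entries measure how often two channels fire together, which is what carries the texture information. Because of the division by n, tiling the same pattern over a larger spatial area leaves the matrix unchanged, so layers of different sizes can be compared on the same scale. Back in the model, the five layers whose Gram matrices are compared are: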

    style_layers = ['block1_conv1', 'block2_conv1', 'block3_conv1', 'block4_conv1', 'block5_conv1']

The style loss can then be written as:

    def gram_matrix(input_tensor):
        # Flatten the spatial dimensions so each row is one position's channel vector.
        channels = int(input_tensor.shape[-1])
        a = tf.reshape(input_tensor, [-1, channels])
        n = tf.shape(a)[0]
        # Channel-by-channel dot products, averaged over the n positions.
        gram = tf.matmul(a, a, transpose_a=True)
        return gram / tf.cast(n, tf.float32)

    def get_style_loss(base_style, gram_target):
        # base_style is expected to be a single (height, width, channels) feature map.
        height, width, channels = base_style.get_shape().as_list()
        gram_style = gram_matrix(base_style)
        return tf.reduce_mean(tf.square(gram_style - gram_target))

The total loss is then the weighted sum of these two calculated losses:

    style_score *= style_weight
    content_score *= content_weight
    loss = style_score + content_score

Finally, the model performs the style transfer by iteratively minimising the combined loss function through gradient descent for a specified number of iterations, using pre-defined content and style weights. In this model an Adam optimiser is used:

    opt = tf.optimizers.Adam(learning_rate=5, beta_1=0.99, epsilon=1e-1)

With a GPU we run the model through 1000 iterations and output the generated image every 200th iteration to see the progress of the style transfer.
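The iteration schedule described above can be sketched in plain Python. This is a rough outline under stated assumptions, not the code used for the results here: run_iterations, step_fn and toy_step are hypothetical names introduced for illustration, and the toy step simply shrinks a number instead of updating an image. In the TensorFlow setup, the step would presumably compute the combined loss, take its gradient with respect to the generated image and apply it with the Adam optimiser.

```python
def run_iterations(step_fn, num_iterations=1000, snapshot_every=200):
    """Call step_fn repeatedly, keeping periodic snapshots and the best result.

    step_fn is a hypothetical callback: it performs one optimiser update and
    returns (loss, image) for the current state.
    """
    best_loss, best_image = float("inf"), None
    snapshots = []  # (iteration, image) pairs, one per snapshot_every iterations
    for iteration in range(1, num_iterations + 1):
        loss, image = step_fn()
        if loss < best_loss:
            best_loss, best_image = loss, image
        if iteration % snapshot_every == 0:
            snapshots.append((iteration, image))
    return best_loss, best_image, snapshots


# Toy stand-in for a real optimisation step: the "image" is just a counter
# and the loss shrinks by 1% on every call.
state = {"loss": 100.0, "image": 0}


def toy_step():
    state["loss"] *= 0.99
    state["image"] += 1
    return state["loss"], state["image"]


best_loss, best_image, snapshots = run_iterations(toy_step)
print([iteration for iteration, _ in snapshots])  # [200, 400, 600, 800, 1000]
```

Tracking the lowest-loss image separately from the snapshots is a design choice worth keeping: with an aggressive learning rate the loss is not guaranteed to fall on every iteration, so the last image is not necessarily the best one.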

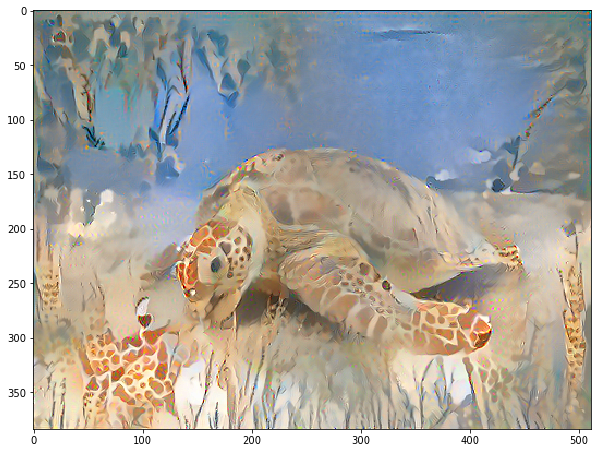

The final image after 1000 iterations can be seen below. The model has been able to capture the stylistic components of Ashlee's illustration well - inclusion of plants with the correct stylistic look and also transferring the giraffe's pattern into the image.

De-noising Generated Image to Mitigate Artefacts

The raw output image from the Neural Style Transfer suffers from small-scale artefacts, which I tried to mitigate using Non-local Means De-noising from the OpenCV library.

    # Arguments: src, dst, h, hColor, templateWindowSize, searchWindowSize.
    dst = cv2.fastNlMeansDenoisingColored(best, None, 10, 10, 3, 5)

The common parameters were varied to find an optimal set-up for the image in question.

The presence of visual artefacts has been greatly reduced and we are left with a Green Sea Turtle which has the visual style of Ashlee's Giraffe illustration.

Other Examples
